Recently I read a book called "Beautiful Data" (the Story behind Elegant Solutions) a good book, visualization from to the basic until intermediate chapters, easy reading, and with some examples in many chapters.

The firsts chapters is about how the world is constantly watched by many apps that tracks informations, and many studies observing pattern in consumption, text (twitter, facebook status), and locations. That's isn't new, but this perspective let him explain new patterns and a little more show how these persons did it that?.

this isn't a new book, was published at 2009, so many of examples like the chapter sixteen, not avalaible:

The book Explain that R have many tools for do that, here some examples:

the command above makes a scatter plot matrix:

The firsts chapters is about how the world is constantly watched by many apps that tracks informations, and many studies observing pattern in consumption, text (twitter, facebook status), and locations. That's isn't new, but this perspective let him explain new patterns and a little more show how these persons did it that?.

this isn't a new book, was published at 2009, so many of examples like the chapter sixteen, not avalaible:

but the code that show could be usefull for applied to another dataset, for exploratory analize, I used the dataset about the wikipedia campaign vs amount obtain.

The book Explain that R have many tools for do that, here some examples:

url='http://samarium.wikimedia.org/campaign-vs-amount.csv'; wiki = read.delim(url,sep=","); plot(wiki);

the command above makes a scatter plot matrix:

This should be a first look of the data, 9 variables here could observe that some variables looks like a data type factor or date and not numeric. columns medium, campaign, stop_data, start_date should be cast in the correct datatype, always is better do it.

for this we could use: as.factor(variable), as.numeric(variable), as.Date(variable)

hist(wiki$usdmax) # make a histogram

however we could see, that this doesn't look right, so we could look for extrem values or to see more distribuited the data.

index = which(wiki$usdmax < 800) wikiless = wiki[index,] hist(wikiless$usdmax)

so later we could see for what medium wikipedia get more donations:

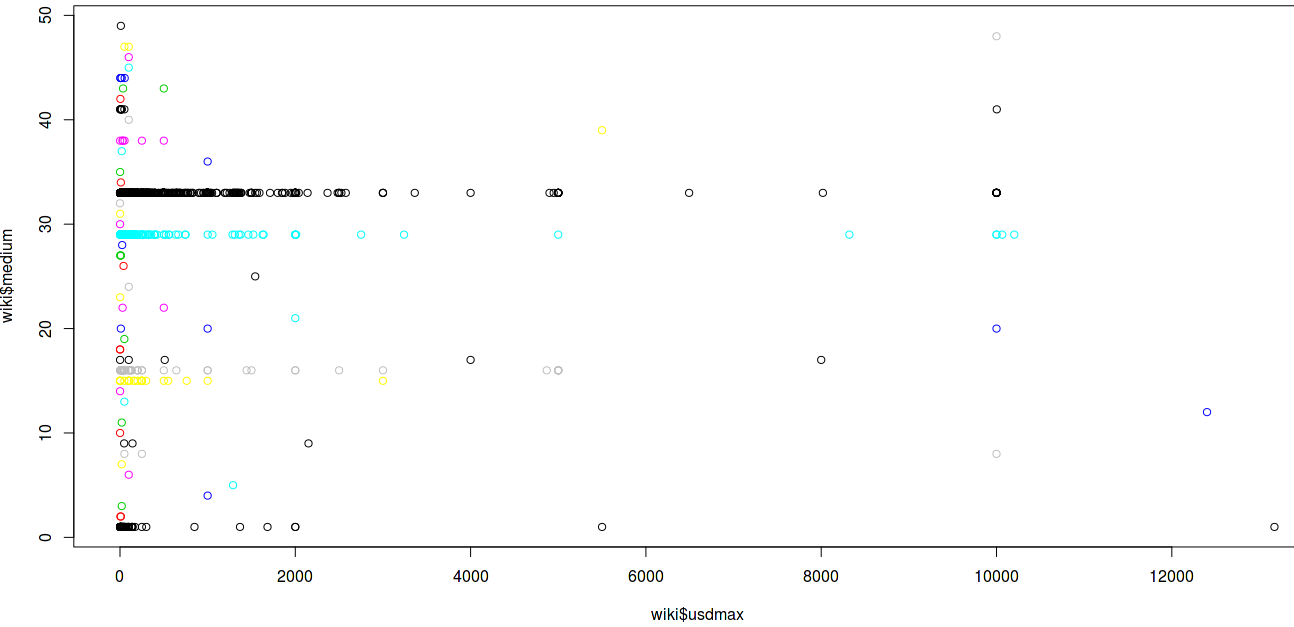

plot(wiki$avg, wiki$medium, col=wiki$medium) #(Imagen 1) plot(wiki$usdmax, wiki$medium, col=wiki$medium) # (imagen 2) summary(wiki$medium)

( imagen 1)

(imagen 2)

In the summary we could see which medium had more donations, and that was via email and via sitenotice.

library(ggplot2);

qplot(wiki$sum, wiki$medium);

qplot(wiki$avg, wiki$medium, col=wiki$medium);

qplot(wiki$usdmax, wiki$medium, col=wiki$medium);

smoothScatter(log(wiki$count), log(wiki$avg));

smoothScatter(log(wiki$count), log(wiki$avg),

colramp=colorRampPalette(c('white', 'blue')));

smoothScatter(log(wiki$count), log(wiki$usdmax),

colramp=colorRampPalette(c('white', 'deeppink')));

Here a simple plot made with ggplot2 library, in a easy form, something like that:

wiki$stop_date = as.numeric(wiki$stop_date) wiki$start_date = as.numeric(wiki$start_date) wikinumeric = wiki[,-c(1,2)] cors = cor(wikinumeric, use='pair') require(lattice) levelplot(cors) #image(cors, col=col.corrgram(7)) #this doesn't work now is: # rainbow, heat.colors, topo.colors, terrain.colors image(cors, col = heat.colors(7)) axis(1, at=seq(0,1, length=nrow(cors)), labels=row.names(cors))

All this command and scripts could be found in the chapter seventeen of the book.

Data could be find here:

And R Code here:

https://github.com/j3nnn1/homework/blob/master/withR/vis/beautiful_data.R